In a world where even the most remote locations have internet access, why do we need Edge AI, Physical AI, or TinyML? That’s the question I’ll try to answer in this article.

Let’s start with some definitions.

Embedded

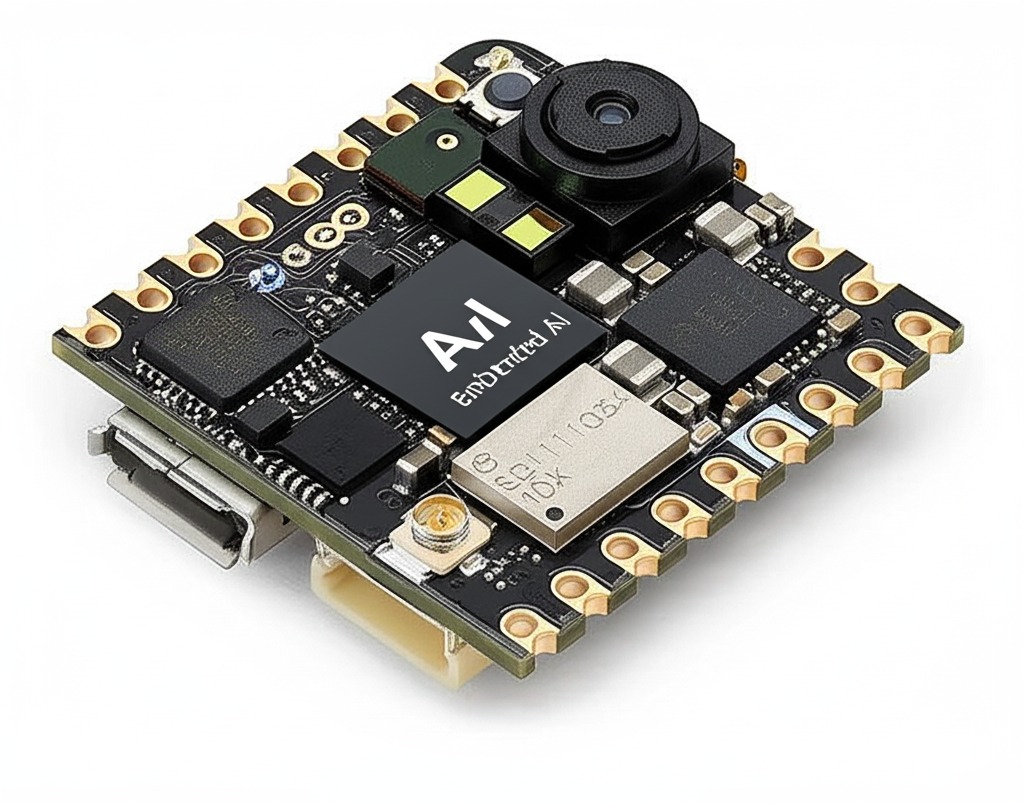

Embedded systems are the computers that control the electronics of all kinds of physical devices. For example, at CONAUTI LATAM we use the Nicla Vision, which captures contextual data such as video and sound. Embedded software, in turn, is the software that runs inside these electronic devices.

Embedded systems are usually designed for a specific, dedicated task, such as performing the ETL (data extraction, transformation, and loading) step of a machine learning workflow.
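To make the ETL idea concrete, here is a minimal sketch of what such a dedicated task might look like on a sensor node. It assumes a vibration-style sensor that returns raw integer counts; the function names (`extract`, `transform`, `load`), the `scale` factor, and the choice of features (mean, RMS, peak) are hypothetical illustrations, not CONAUTI LATAM's actual pipeline.

```python
import math
from collections import deque


def extract(raw_counts, scale):
    """Extract: convert raw sensor counts to physical units (scale is hypothetical)."""
    return [c * scale for c in raw_counts]


def transform(samples):
    """Transform: summarize one window of samples as a few compact features."""
    n = len(samples)
    return {
        "mean": sum(samples) / n,
        "rms": math.sqrt(sum(s * s for s in samples) / n),
        "peak": max(abs(s) for s in samples),
    }


def load(features, store):
    """Load: append features to a bounded on-device buffer (oldest entries drop out)."""
    store.append(features)


if __name__ == "__main__":
    buffer = deque(maxlen=100)  # fixed size so RAM use never grows
    window = extract([0, 2, -2, 0], scale=0.5)
    load(transform(window), buffer)
    print(buffer[-1])
```

The bounded `deque` is a deliberate choice: on a device with little RAM, the buffer should have a fixed size rather than grow with every window.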

Finally, AI programming for embedded systems is the art and science of deploying AI models, whether machine learning, deep learning, or generative AI, on electronic devices with limited flash memory, limited RAM, and limited AI acceleration. Examples of the hardware we use at CONAUTI LATAM are the Nicla Vision, the Portenta, and the Raspberry Pi.

Edge Devices

Edge devices perform computation locally, on the device itself, rather than on a cloud server. This approach is known as edge computing.

One of the biggest benefits of a network of edge devices is that the data captured from the real world stays local.

Edge AI

Edge devices, then, are embedded systems that bridge the digital and physical worlds. Remember that data is the raw material for training Machine Learning, Deep Learning, and Generative AI models and for running inference with them. Note that unstructured data, such as video and audio, differs from structured data, the kind that comes from sensors. Sensor data tends to arrive in high volumes but carries relatively little useful information.

For example, in predictive maintenance at Edgemant Conauti, IoT devices are typically treated as simple collectors: they gather data through their sensors and send it to servers, where our specialized maintenance workers (AI Agents) later use it for inference. The problem is that this data isn’t always useful, and storing it for later inference is expensive, especially in the cloud.

The solution, then, is Edge AI. At Edgemant Conauti, instead of sending the data to a server, whether local or in the cloud, we bring the ML, DL, or AI Agent models to the hardware where the data is collected. This enables on-device analysis, so only useful data is sent to the local or cloud server, significantly reducing both the energy spent transmitting data and the cost of storing it.
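The "send only useful data" idea can be sketched with a very simple on-device rule. This is a minimal illustration under stated assumptions: a rolling z-score outlier test stands in for the ML, DL, or AI Agent model a real deployment would run, and `EdgeFilter`, `filter_stream`, the 50-reading window, and the 3-standard-deviation threshold are all hypothetical choices.

```python
from collections import deque
from statistics import mean, pstdev


class EdgeFilter:
    """Hypothetical on-device filter: forward a reading only if it is an outlier
    relative to a rolling window of recent readings (z-score rule)."""

    def __init__(self, window: int = 50, threshold: float = 3.0):
        self.history = deque(maxlen=window)  # recent readings kept in RAM
        self.threshold = threshold           # std devs from the mean that count as "useful"

    def is_useful(self, value: float) -> bool:
        # Warm-up: without a full window there is no baseline, so nothing is sent.
        if len(self.history) < self.history.maxlen:
            self.history.append(value)
            return False
        mu = mean(self.history)
        sigma = pstdev(self.history)
        self.history.append(value)  # the oldest reading drops out automatically
        if sigma == 0:
            # Perfectly flat signal: any change at all is worth reporting.
            return value != mu
        return abs(value - mu) / sigma > self.threshold


def filter_stream(readings, edge_filter):
    """Return only the readings worth transmitting to the local or cloud server."""
    return [r for r in readings if edge_filter.is_useful(r)]


if __name__ == "__main__":
    f = EdgeFilter(window=50, threshold=3.0)
    readings = [1.0] * 100 + [10.0] + [1.0] * 20  # steady signal with one spike
    sent = filter_stream(readings, f)
    print(f"{len(sent)} of {len(readings)} readings sent: {sent}")
```

In this toy stream, only the spike leaves the device; the steady readings are analyzed locally and never transmitted or stored remotely.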

In conclusion, Edge AI means making decisions at the edge, close to where the data is obtained.

Embedded Machine Learning

Embedded machine learning is the art and science of deploying AI Agents, machine learning models, or deep learning models on embedded devices that are constrained in RAM, flash, and storage, and that may include accelerators such as GPUs or TPUs.
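A quick way to see what "RAM and flash constraints" means in practice is a back-of-envelope budget check: model weights must fit in flash, and the intermediate activations produced during inference must fit in RAM. The sketch below assumes a hypothetical board with 1 MiB of flash and 256 KiB of RAM and a hypothetical 500,000-parameter model; these are illustrative figures, not the specifications of the Nicla Vision or Portenta. It also ignores runtime and firmware overhead unless you pass `firmware_bytes`. Storing weights as 8-bit integers instead of 32-bit floats (quantization) is one common way to shrink a model to fit.

```python
from dataclasses import dataclass

# Bytes needed to store one weight in each numeric format.
BYTES_PER_PARAM = {"float32": 4, "int8": 1}


@dataclass
class DeviceBudget:
    """Hypothetical memory budget of a target board."""
    flash_bytes: int  # non-volatile space for firmware + model weights
    ram_bytes: int    # working memory for activations during inference


def fits(num_params: int, dtype: str, peak_activation_bytes: int,
         device: DeviceBudget, firmware_bytes: int = 0) -> bool:
    """Check whether a model's weights fit in flash and its activations fit in RAM."""
    weight_bytes = num_params * BYTES_PER_PARAM[dtype]
    flash_ok = weight_bytes + firmware_bytes <= device.flash_bytes
    ram_ok = peak_activation_bytes <= device.ram_bytes
    return flash_ok and ram_ok


if __name__ == "__main__":
    board = DeviceBudget(flash_bytes=1_048_576, ram_bytes=262_144)  # 1 MiB / 256 KiB
    for dtype in ("float32", "int8"):
        print(dtype, fits(500_000, dtype, peak_activation_bytes=100_000, device=board))
```

With these numbers, the float32 weights (2,000,000 bytes) overflow the flash budget, while the int8 version (500,000 bytes) fits.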

Now let’s move on to the answer:

Why do we need Edge AI?

Let’s use our BLERP framework:

B: When we don’t have enough bandwidth or a reliable internet connection.

L: When latency (the total time it takes the AI Agent to produce an inference) must be low.

E: When we want to save money by reducing the cost and energy of moving and storing data.

R: When we need to secure access to data and Agents and comply with data protection and processing laws and regulations.

P: When we seek data privacy.
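The B and L criteria can be checked with simple arithmetic before choosing between cloud and edge inference. The sketch below is a back-of-envelope model under stated assumptions: cloud latency is approximated as upload time plus network round trip plus server inference time, and every number in the usage block (a 50 kB frame, a 1 Mbit/s uplink, a 100 ms round trip, 20 ms cloud inference versus 80 ms on-device inference, 10 frames per second) is hypothetical.

```python
SECONDS_PER_DAY = 86_400


def cloud_latency_ms(payload_bytes: int, uplink_bps: int,
                     rtt_ms: float, server_infer_ms: float) -> float:
    """Time to upload one sample, wait for the round trip, and run inference remotely."""
    upload_ms = payload_bytes * 8 * 1000 / uplink_bps  # bytes -> bits -> milliseconds
    return upload_ms + rtt_ms + server_infer_ms


def daily_upload_bytes(payload_bytes: int, samples_per_second: int) -> int:
    """Data volume sent to the cloud per day if every sample is streamed."""
    return payload_bytes * samples_per_second * SECONDS_PER_DAY


if __name__ == "__main__":
    cloud = cloud_latency_ms(50_000, 1_000_000, rtt_ms=100, server_infer_ms=20)
    edge = 80  # hypothetical on-device inference time in ms
    print(f"cloud: {cloud:.0f} ms, edge: {edge} ms")
    print(f"streaming 10 frames/s uploads {daily_upload_bytes(50_000, 10) / 1e9:.1f} GB/day")
```

Even though the server runs the model faster in this example, uploading the frame dominates the cloud path, and streaming every frame would mean tens of gigabytes per day to move and store.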

In conclusion, with embedded Edge AI, data does not need to leave the device or its physical location to be processed by AI Agents. This builds trust between the user and the product and gives users full control over their data.

You can read more about Edge AI, Physical AI, and TinyML on our blog (EDGE AI BLOG).

Enrique Suárez.
